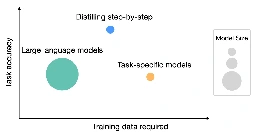

Distilling step-by-step: Outperforming larger language models with less training data and smaller model sizes

blog.research.google