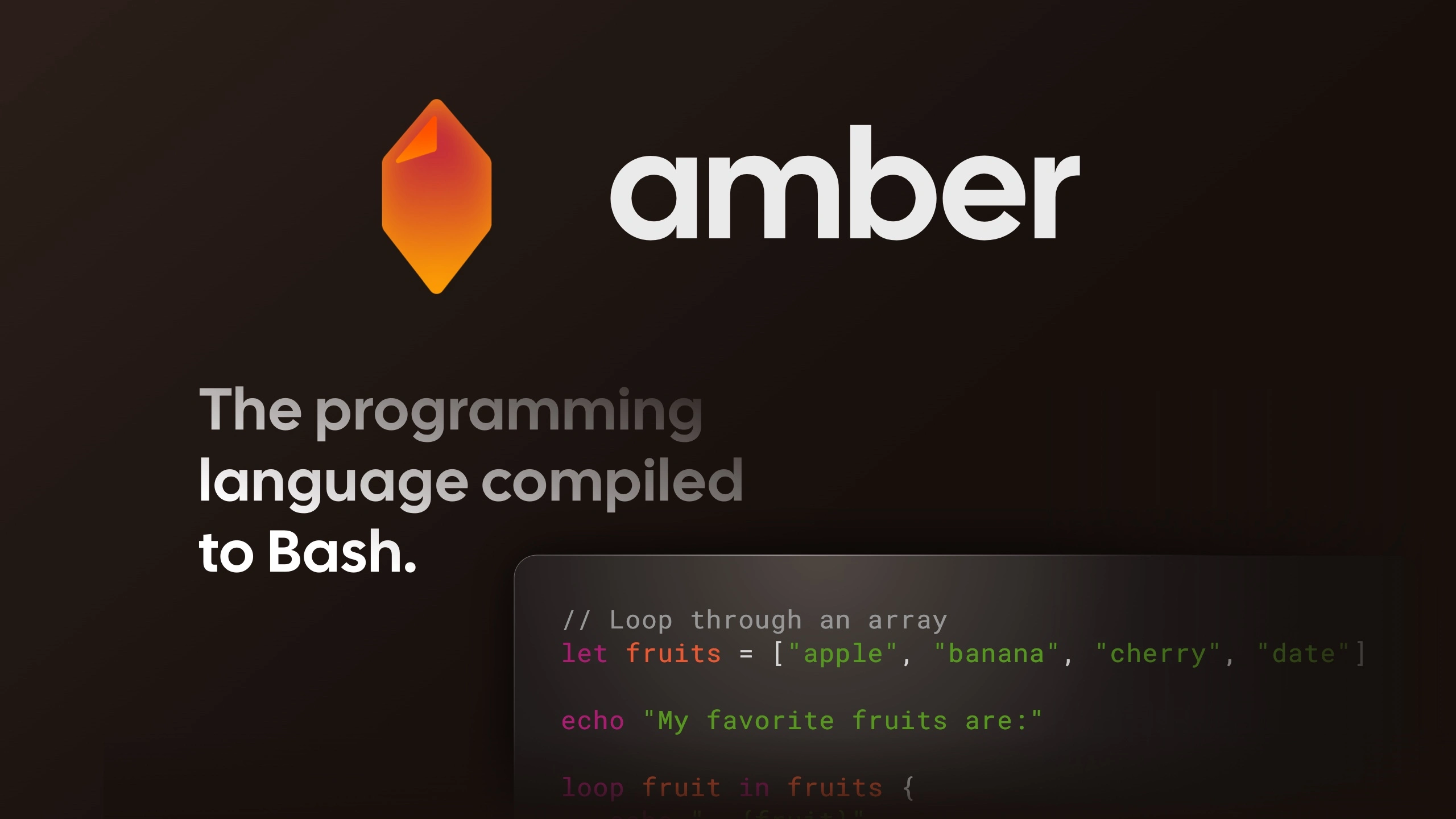

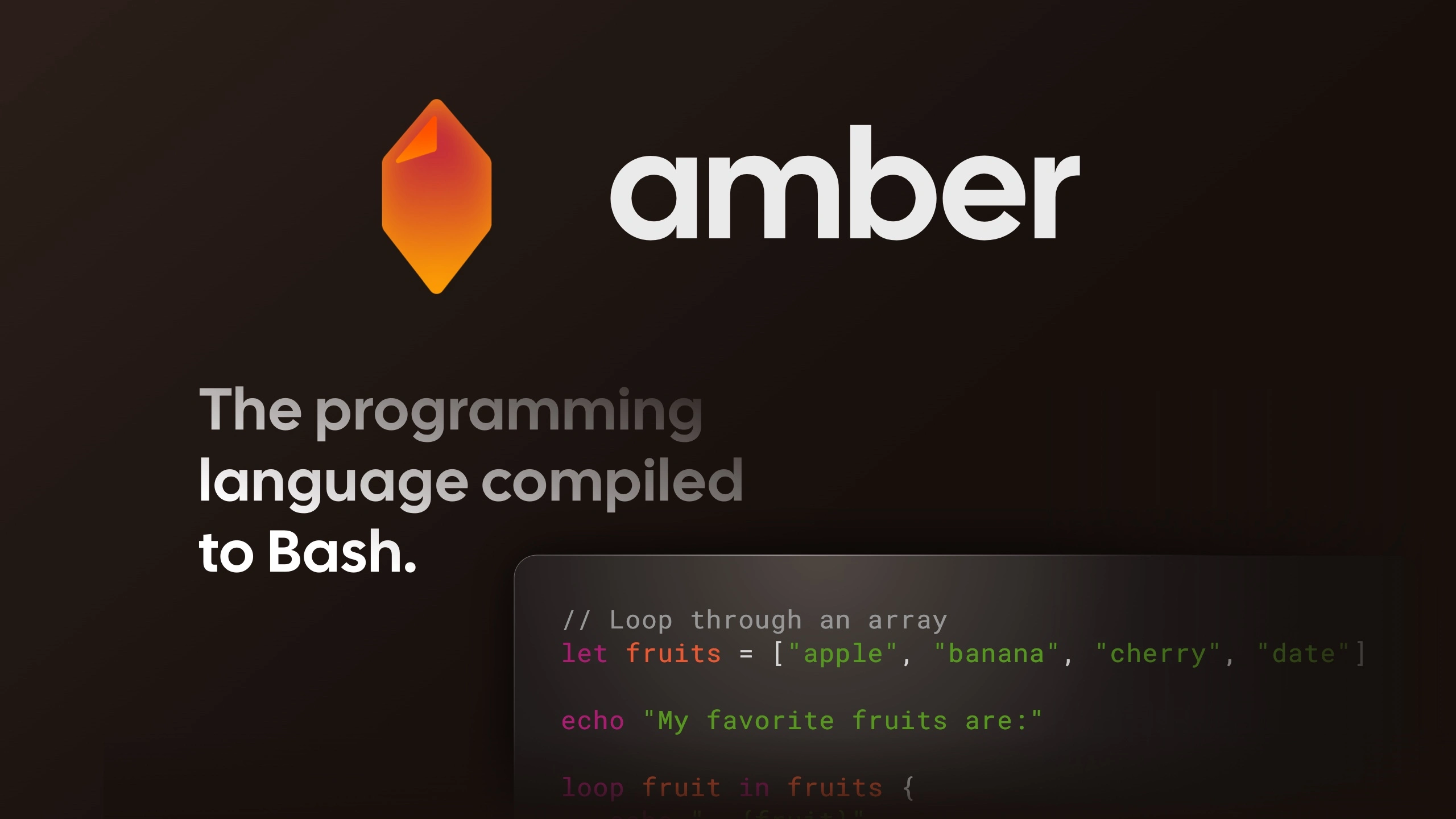

Amber - the programming language compiled to Bash

amber-lang.com

Amber The Programming Language

New favorite tool 😍
