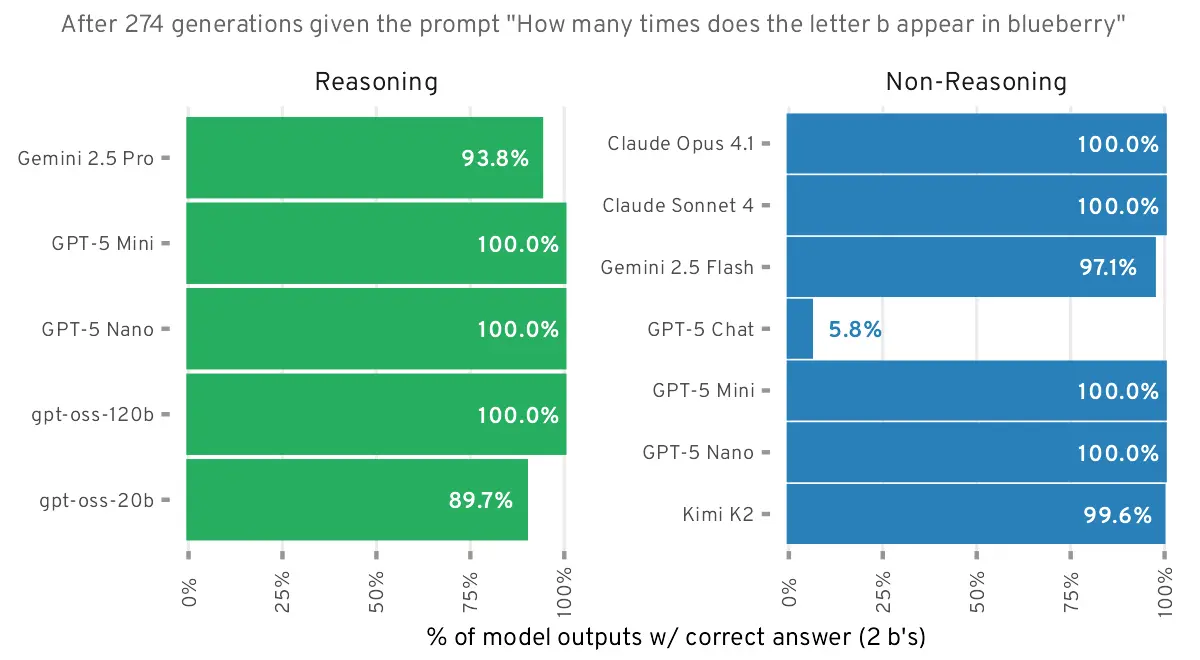

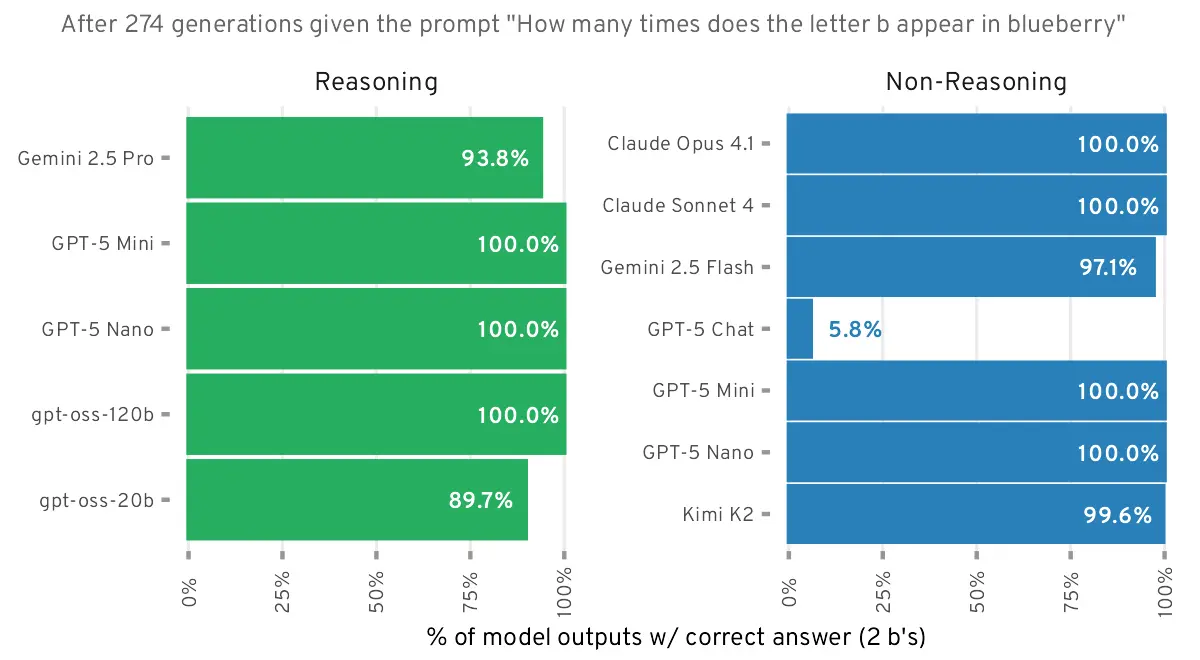

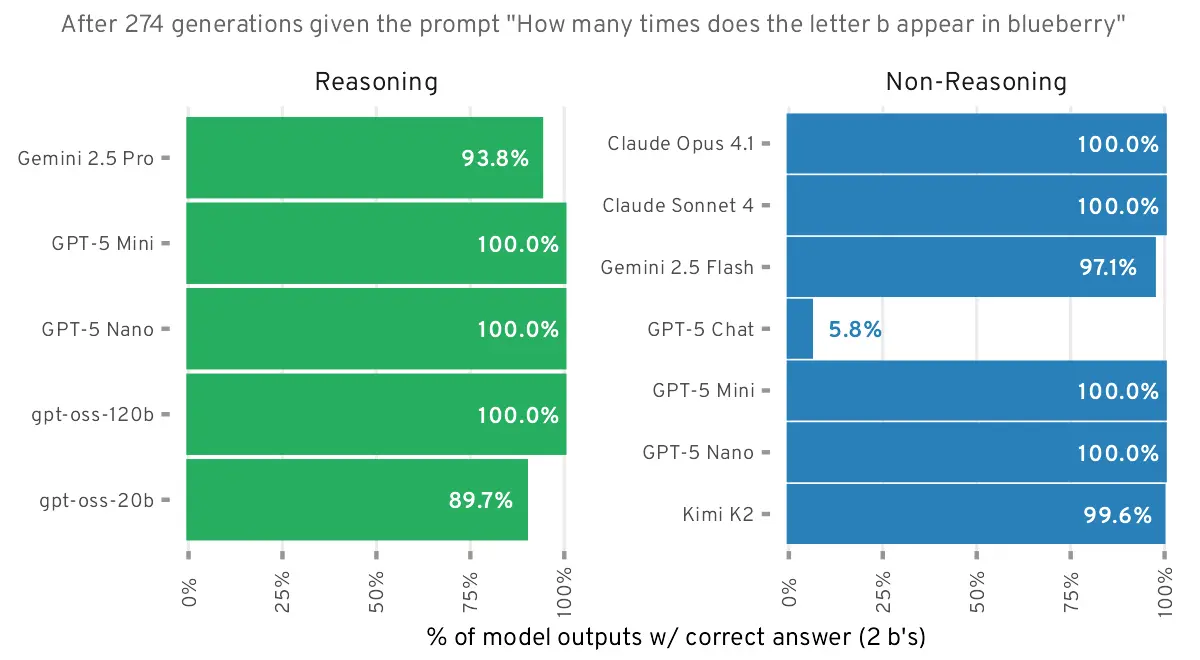

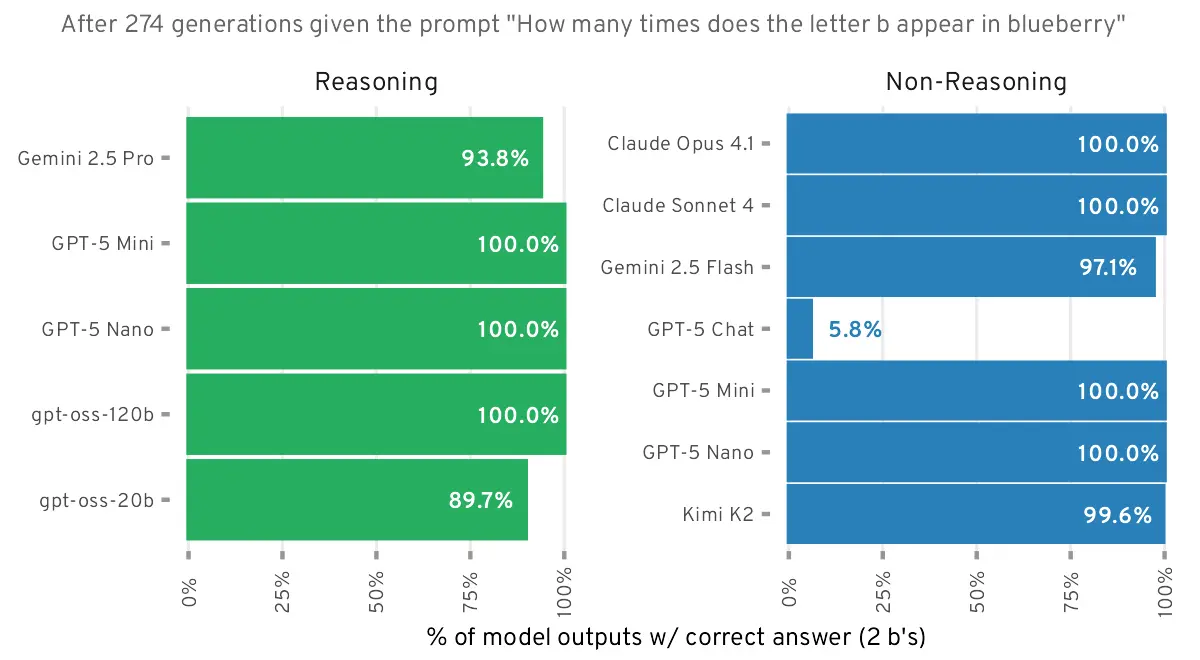

Can modern LLMs count the number of b's in "blueberry"?

minimaxir.com

Can modern LLMs actually count the number of b's in "blueberry"?

As if a stochastic parrot could ever count or calculate...

LLMs work really well. You get something out of them that resembles human language. That is what they were designed for and nothing else. Stop trying to make a screwdriver shoot laser beams; it's not going to happen.

https://www.anthropic.com/research/tracing-thoughts-language-model

Well, that's provably false: the neural network can be traced to reveal the exact process and calculations it follows when doing math. See the research above, under the heading "Mental Math".

The reason LLMs struggle to count letters is tokenization. Before the text is given to the LLM, it is converted into tokens: integer IDs, each mapped to a numeric vector, that represent whole words or parts of words. The LLM has no concept of, or access to, the underlying letters that make up those words. It outputs only tokens, which are then converted back into text.

EDIT: you can downvote me, but facts are facts lol.
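To illustrate the tokenization point, here is a minimal sketch built on a made-up vocabulary and a simple greedy longest-match rule. Real LLM tokenizers (e.g. BPE) are learned and have tens of thousands of entries, so the `VOCAB` table and its IDs here are purely hypothetical. The point is that "blueberry" reaches the model as two opaque IDs, and the letter count only becomes visible once you decode back to text.

```python
# Hypothetical toy vocabulary; the IDs are invented for illustration only.
VOCAB = {"blue": 101, "berry": 102, "b": 1, "e": 2, "l": 3, "r": 4, "u": 5, "y": 6}
ID_TO_PIECE = {i: piece for piece, i in VOCAB.items()}


def tokenize(text: str) -> list[int]:
    """Greedy longest-match tokenization over the toy vocabulary."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, so "blue" wins over "b".
        for length in range(len(text) - i, 0, -1):
            piece = text[i:i + length]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                i += length
                break
        else:
            raise ValueError(f"no token for {text[i]!r}")
    return ids


ids = tokenize("blueberry")
print(ids)  # [101, 102]: nothing in these two integers spells out the letters

# Counting letters requires mapping IDs back to characters first.
decoded = "".join(ID_TO_PIECE[i] for i in ids)
print(decoded.count("b"))  # 2
```

With single-letter tokens, `tokenize("bbb")` would give `[1, 1, 1]`, so each "b" would appear as its own input position. That is why letter-level tokenization comes up in this thread.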

There is definitely more going on with LLMs than just direct parroting.

However, there is also an upper limit to what an LLM can reason about or calculate. Since LLMs basically boil down to a fixed number of layers of linear operations (plus simple nonlinearities), every one of them has an upper bound on how accurately it can calculate anything.

Many chat systems hand any actual math off to Python behind the scenes. If you run a raw LLM, though, you can see the errors grow faster as you move to higher-order operations (addition, then multiplication, then powers, etc.).
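To make "use Python behind the scenes" concrete, here is a minimal sketch of a calculator tool that a host application might expose. The model only has to write the expression, and ordinary code computes it exactly. The function name `calculate` and the overall tool setup are hypothetical. Real chat products connect this through their own tool-calling or code-execution mechanisms, which this does not reproduce.

```python
import ast
import operator

# Hypothetical tool: the model would emit an expression string such as
# "123456789 * 987654321" instead of predicting the digits token by token.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculate(expr: str) -> int:
    """Exactly evaluate an integer arithmetic expression (no names, no calls)."""

    def ev(node: ast.AST) -> int:
        if isinstance(node, ast.Constant) and isinstance(node.value, int):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        # Anything else (function calls, names, attributes) is rejected.
        raise ValueError(f"unsupported expression: {ast.dump(node)}")

    return ev(ast.parse(expr, mode="eval").body)


print(calculate("123456789 * 987654321"))  # 121932631112635269
print(calculate("2 ** 64"))  # 18446744073709551616
```

Python integers have arbitrary precision, so the result stays exact no matter how large the numbers get. By contrast, the raw model's accuracy degrades as operations move up from addition to multiplication to powers.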

So what you're saying is it can't count.

Got it.

What makes you think that using single letters as tokens instead could teach a stochastic parrot to count or calculate? Both are abilities. You can't create an ability from data alone, no matter how much data you have. You can only make a model seem to have that ability. Again: all you can ever get out of it is something that resembles human language. There is nothing beyond or behind that, by design. Hallucinations are no exception: when an LLM advises you to eat a rock a day, it is still working as designed, because it outputs a correct-sounding sentence based purely and entirely on probability. But counting and calculating are not based on probability, as anyone who has ever taken a math class knows very well. No math teacher will let students guess the result of an equation.