Fighting pedophilia at the expense of our privacy: The EU rule that could break the internet

Hundreds of academics and engineers, non-profit organizations such as Reporters Without Borders, and the Council of Europe believe that the Child Sexual Abuse Regulation (CSAR) would mean sacrificing confidentiality on the internet, and that this is a price democracies cannot afford.

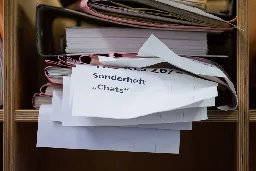

The European Data Protection Supervisor, who is preparing a statement on the matter for late October, has said that the proposal could become the basis for de facto widespread and indiscriminate scanning of all EU communications. The proposed regulation, often referred to by critics as Chat Control, holds companies that provide communication services responsible for ensuring that unlawful material does not circulate online. If a risk assessment determines that a service is being used as a channel by pedophiles, that service will have to implement automatic screening.

The mastermind behind the billboards and newspaper exhortations calling on Apple to detect pedophile material on iCloud is, reportedly, a non-profit organization called Heat Initiative, which is part of a crusade against the encryption of communications known in the U.S. as the Crypto Wars. This movement has shifted its rationale from fighting terrorism to combating the spread of online child pornography in order to demand an end to encrypted messaging, the last great pocket of privacy left on the internet. “It is significant that the U.S., the European Union and the United Kingdom are simultaneously processing regulations that, in practice, will curtail encrypted communications. It seems like a coordinated effort,” says Diego Naranjo, head of public policy at the digital rights non-profit EDRi.